How hard is it to build your own top performance quantum circuit simulator? Does it really needs thousands of lines of code to implement it?

At least in Julia language, you don’t! We can easily achieve top performance via a few hundreds of code while supporting

CUDA and symbolic calculation.

Like my previous blog posts, you can do it in ONE DAY as well. I’ll introduce how to do this with Julia language while going

through some common tricks for high performance computing in Julia language. I won’t talk much about the Julia language itself

or it will be a very long blog post, thus if you want to follow this blog post but you don’t know how to use the Julia programming

language yet, I would suggest you to checkout materials here first.

Background

Quantum computing has been a popular research topic in recent years. And building simulators can be useful for related research. I’m not going to give you a full introduction of what is quantum computing in this blog post, but I find you this nice tutorial from Microsoft if you are

interested in knowing what is the quantum computing. And you don’t really need to understand everything about quantum computing to follow this blog post - the emulator itself is just about special matrix-vector or matrix-matrix multiplication.

So to be simple, simulating quantum circuits, or to be more specific simulating how quantum circuits act on a quantum register, is about how to calculate large matrix-vector multiplication that scales exponentially. The most brute-force and accurate way of doing it via full amplitude simulation, which means we do this matrix-vector multiplication directly.

The vector contains the so-called quantum state and the matrices are quantum gate, which are usually small. The diagram of quantum circuits is a representation of these matrix multiplications. For example, the X gate is just a small matrix

$$

\begin{pmatrix}

0 & 1\\

1 & 0

\end{pmatrix}

$$

In theory, there is no way to simulate a general quantum circuit (more precisely, a universal gate set) efficiently, however, in practice, we could still do it within a rather small scale with some tricks that make use of the structure of the gates.

To know how to calculate a quantum circuit in the most naive way, we need to know two kinds of mathematical operations

Tensor Product/Kronecker Product, this is represented as two parallel lines in the quantum circuit diagram, e.g

and by definition, this can be calculated by

$$

\begin{pmatrix}

a_{11} & a_{12} \\

a_{21} & a_{22}

\end{pmatrix} \otimes

\begin{pmatrix}

b_{11} & b_{12} \\

b_{21} & b_{22}

\end{pmatrix} =

\begin{pmatrix}

a_{11} \begin{pmatrix}

b_{11} & b_{12} \\

b_{21} & b_{22}

\end{pmatrix} & a_{12} \begin{pmatrix}

b_{11} & b_{12} \\

b_{21} & b_{22}

\end{pmatrix} \\

a_{21} \begin{pmatrix}

b_{11} & b_{12} \\

b_{21} & b_{22}

\end{pmatrix} & a_{22} \begin{pmatrix}

b_{11} & b_{12} \\

b_{21} & b_{22}

\end{pmatrix}

\end{pmatrix}

$$

Matrix Multiplication, this is the most basic linear algebra operation, I’ll skip introducing this. In quantum circuit diagram, this is represented by blocks connected by lines.

As a conclusion of this section, you can see simulating how pure quantum circuits act on a given quantum state is about how to implement some special type of matrix-vector multiplication

efficiently. If you know about BLAS (Basic Linear Algebra Subprograms), you will realize this kind of operations are only BLAS level 2 operations, which does not require any smart tiling

technique and are mainly limited by memory bandwidth.

So let’s do it!

Implementing general unitary gate

Thus the simplest way of simulating a quantum circuit is very straightforward: we can just make use of Julia’s builtin functions:kron and *.

1 | using LinearAlgebra |

However, this is obviously very inefficient:

- we need to allocate a $2^n \times 2^n$ matrix every time we try to evaluate the gate.

- the length of the vector can only be $2^n$, thus we should be able to calculate it faster with this knowledge.

I’ll start from the easiest thing: if we know an integer is $2^n$, it is straight forward to find out $n$ by the following method

1 | log2i(x::Int64) = !signbit(x) ? (63 - leading_zeros(x)) : throw(ErrorException("nonnegative expected ($x)")) |

this is because we already know how long our integer is in the program by looking at its type, thus simply minus the number of leading zeros would give us the answer.

But don’t forget to raise an error when it’s an signed integer type. We can make this work on any integer type by the following way

1 | for N in [8, 16, 32, 64, 128] |

the command @eval here is called a macro in Julia programming language, it can be used to generate code. The above code generates the implementation of log2i for signed

and unsigned integer types from 8 bits to 128 bits.

Let’s now consider how to write the general unitary gate acting on given locations of qubits.

1 | function broutine!(r::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}) where N |

this matrix will act on some certain qubits in the register, e.g given a 8x8 matrix we want it to act on the 1st, 4th and 5th qubit. Based on the implementation of X and Z we know this is about multiplying this matrix on the subspace of 1st, 4th and 5th qubit, which means we need to construct a set of new vectors whose indices iterate over the subspace of 0xx00x, 0xx01x, 0xx10x, 0xx11x etc. Thus the first thing we need to do is to find a generic way to iterate through the subspace of 0xx00x then by adding an offset such as 1<<1 to each index in this subspace, we can get the subspace of 0xx01x etc.

Iterate through the subspace

To iterate through the subspace, we could iterate through all indices in the subspace. For each index, we move each bit to its position in the whole space (from first bit to the last).

This will give us the first subspace which is 0xx00x.

Before we move on, I need to introduce the concept of binary masks: it is an integer that can help us “filter” out some binary values, e.g

we want to know if a given integer’s 4th and 5th bit, we can use a mask 0b11000, where its 4th and 5th bit are 1 the rest is 0, then we

can use an and operation get get the value. Given the location of bits, we can create a binary mask via the following bmask function

1 | function bmask(itr) |

where itr is some iterable. However there are quite a few cases that we don’t need to create it via a for-loop, so we can specialize this function

on the following types

1 | function bmask(range::UnitRange{Int}) |

however, we maybe want to make the implementation more general for arbitrary integer types, so let’s use a type variable T!

1 | function bmask(::Type{T}, itr) where {T<:Integer} |

However after we put a type variable as the first argument, it is not convenient when we just want to use Int64 anymore,

let’s create a few convenient methods then

1 | bmask(args...) = bmask(Int, args...) |

The final implement would look like the following

1 | bmask(args...) = bmask(Int, args...) |

To move the bits in subspace to the right position, we need to iterate through all the contiguous region in the bitstring, e.g for 0xx00x, we

move the 2nd and 3rd bit in subspace by 3 bits together, this can be achieved by using a bit mask 001 and the following binary operation

1 | (xxx & ~0b001) << 1 + (xxx & 0b001) # = xx00x |

we define this as a function called lmove:

1 | lmove(b::Int, mask::Int, k::Int)::Int = (b & ~mask) << k + (b & mask) |

we mark this function @inline

here to make sure the compiler will always inline it,

now we need to generate all the masks by counting contiguous region of the given locations

1 | function group_shift(locations) |

now to get the index in the whole space, we simply move each contiguous region by the length of their region,

1 | for s in 1:n_regions |

where the initial value of index is the subspace index, and after the loop, we will get the index in the whole space.

Now, since we need to iterate the all the possible indices, it would be very convenient to have an iterator, let’s implement

this as an iterator,

1 | struct BitSubspace |

and we can construct it via

1 | function BitSubspace(n::Int, locations) |

now, let’s consider the corresponding whole-space index of each index in the subspace.

1 | function Base.getindex(it::BitSubspace, i) |

now let’s overload some methods to make this object become an iterable object

1 | Base.length(it::BitSubspace) = it.n |

let’s try it! it works!

1 | julia> for each in BitSubspace(5, [1, 3, 4]) |

Multiply matrix in subspace

now we know how to generate the indices in a subspace, we need to multiply the matrix to each subspace,

e.g for a unitary on the 1, 3, 4 qubits of a 5-qubit register, we need to multiply the matrix at 0xx0x,0xx1x, 1xx0x and 1xx1x. Thus we can create the subspace of x00x0 by BitSubspace(5, [1, 3, 4])

and subspace of 0xx0x by BitSubspace(5, [2, 5]), then add each index in x00x0 to 0xx0x, which looks like

1 | subspace1 = BitSubspace(5, [1, 3, 4]) |

now we notice subspace2 is the complement subspace of subspace1 because the full space if [1, 2, 3, 4, 5], so let’s redefine our BitSubspace

constructor a bit, now instead of define the constructor BitSubspace(n, locations) we define two functions to create this object bsubspace(n, locations) andbcomspace(n, locations) which stands for binary subspace and binary complement space, the function bsubspace will create subspace1 and the functionbcomspace(n, locations) will create subspace2.

They have some overlapping operations, so I move them to an internal function _group_shift

1 | function group_shift(locations) |

thus our routine will look like the following

1 | function broutine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}) where N |

the log2dim1 is just a convenient one-liner log2dim1(x) = log2i(size(x, 1)). And we use @inbounds here to tell the Julia compiler

that we are pretty sure all our indices are inbounds! And use @views to tell Julia we are confident at mutating our arrays so please

use a view and don’t allocate any memory!

Now you may notice: the iteration in our implementation is independent and may be reordered! This means we can easily make this parallel. The simplest way to parallelize it is via multi-threading. In Julia, this is extremely simple,

1 | function threaded_broutine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}) where N |

but wait, this will give you en error MethodError: no method matching firstindex(::BitSubspace), this is simply because

the @threads wants calculate which indices it needs to put into one thread using firstindex, so let’s define it

1 | Base.firstindex(::BitSubspace) = 1 |

thus the final implementation of subspace would looks like the following

1 | function _group_shift(masks::Vector{Int}, shift_len::Vector{Int}, k::Int, k_prv::Int) |

here I changed the definition of struct BitSubspace to store the number of bits in fullspace so that we can print it nicely

1 | function Base.show(io::IO, ::MIME"text/plain", s::BitSubspace) |

let’s try it!

1 | julia> bsubspace(5, (2, 3)) |

Implement controlled gate

Now I have introduced all the tricks for normal quantum gates, however, there are another important set of gates which is controlled gates.

There are no new tricks, but we will need to generalize the implementation above a little bit.

General controlled unitary gate

Controlled unitary gate basically means when we see an index, e.g 010011, except applying our unitary matrix on the given location (e.g 1 and 2), we need to look

at the control qubit, if the control qubit is 0, we do nothing, if the control qubit is 1 we apply the matrix. (for inverse control gate, this is opposite)

Thus, this means the subspace we will be looking at contains 2 parts: the bits on control locations are 1 and the bits on gate locations are 0. We can define our

offset as following:

1 | ctrl_offset(locs, configs) = bmask(locs[i] for (i, u) in enumerate(configs) if u != 0) |

and the corresponding routine becomes

1 | function routine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}, ctrl_locs::NTuple{M, Int}, ctrl_configs::NTuple{M, Int}) where {N, M} |

Optimize performance for small matrices

In most cases, the matrices of unitary gates we want to simulate are usually very small. They are usually of size 2x2 (1 qubit),4x4 (2 qubit) or 8x8 (3 qubit). In these cases, we can consider using the StaticArray which is much faster than openBLAS/MKL for

small matrices, but we don’t need to change our routine! implementation, since Julia will specialize our generic functions

automatically:

1 | using BenchmarkTools, StaticArrays |

and we can see the benchmark

1 | julia> broutine!(r, $U1, $locs) setup=(r=copy($st)) |

Using StaticArray is already very fast, But this is still space to optimize it, and because StaticArray will

store everything as a type in compile time, this will force us to compile things at runtime, which can make the first

time execution slow (since Julia uses just-in-time compilation,

it can specialize functions at runtime). Before we move forward, let me formalize the problem a bit more:

Now as you might have noticed, what we have been doing is implementing a matrix-vector multiplication but in subspace,

and we always know the indices inside the complement space, we just need to calculate its value in the full space, and

because of the control gates, we may need to add an offset to the indices in full space, but it is 0 by default,

thus this operation can be defined as following

1 | function subspace_mul!(st::AbstractVector{T}, comspace, U, subspace, offset=0) where T |

now let’s implement this in a plain for loop, if you happened to forget how to calculate matrix-vector multiplication,

an einstein summation notation may help:

$$

st_{i_1,i_2,\cdots, p, \cdots, i_{n-1}, i_{n}} = U_{p,q} st_{i_1,i_2,\cdots, q, \cdots, i_{n-1}, i_{n}}

$$

where $U$ is a $2\times 2$ matrix and $p, q$ are indices in our subspace. Now we can write down our subspace multiplication

function

1 | function subspace_mul!(st::AbstractVector{T}, comspace, U, subspace, offset=0) where T |

if you are familiar with BLAS functions, there is a small difference with gemv routine: because we are doing multiplication

in a large space, we need to allocate a small vector to store intermediate result in the subspace and then assign the intermediate

result to the full space vector.

Now let’s use this implementation in our broutine! function:

1 | function broutine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}) where N |

As you can see, it is faster now, but still slower than StaticArrays, this is because our compiler still has no access to the shape information

of your matrix

1 | julia> broutine!(r, $U1, $locs) setup=(r=copy($st)) |

A direct observation is that the inner loop has a very small size in the case of quantum gates

1 | for i in 1:size(U, 1) |

if U is a 2x2 matrix, this can be written as

1 | T1 = U[1, 1] * st[idx[1]] + U[1, 2] * st[idx[2]] |

first you will find we don’t need our intermediate array y anymore! And moreover, notice that the order of T1 and T2 doesn’t matter

for this calculation, which means in principal they can be executed in parallel! But this is an inner loop, we don’t want to waste our

multi-thread resources to parallel it, instead we hope we can have SIMD. However, we don’t have to

call SIMD instructions explicitly, because in fact the compiler

can figure out how to use SIMD instructions for the 2x2 case itself, since it’s very obvious, and also because we have implicitly implied that we only

have a matrix of shape 2x2 by expanding the loop. So let’s just trust our compiler

1 | function subspace_mul2x2!(st::AbstractVector{T}, comspace, U, subspace, offset=0) where T |

we can do similar things for 4x4 and 8x8 matrices, implementing them is quite mechanical, thus we will seek some macro magic

now

1 | function subspace_mul4x4!(st::AbstractVector{T}, comspace, U, subspace, offset=0) where T |

In Julia the macro Base.Cartesian.@nextract will generate a bunch of variables like indices_1, indice_2 etc.

automatically at compile time for us, so we don’t need to do it ourselves. And then we can use Base.Cartesian.@nexprs

to implement the matrix multiplication statements and assign the values back to full space vector st. If you have questions

about how to use Base.Cartesian.@nextract and Base.Cartesian.@nexprs you can use the help mode in Julia REPL to check their

documentation. Now we will want to dispatch the method subspace_mul! to these specialized methods when we have a 2x2, 4x4

or 8x8 matrix, so we move our original plain-loop version subspace_mul! to a new function subspace_mul_generic!,

and dispatch methods based on the matrix size

1 | function subspace_mul!(st::AbstractVector{T}, comspace, U, subspace, offset=0) where T |

if we try it on our previous benchmark, we will see we are faster than StaticArrays now!

1 | julia> broutine!(r, $U1, $locs) setup=(r=copy($st)) |

now since most of the quantum gates are 2x2 matrices, we will focus more on this case, recall that in the 2x2 matrix case,

there is only one location to specify, this will allow us to directly iterate through the subspace by adding up 2^loc, where

the variable loc is the integer represents the location of this gate. This will get us rid of all the heavier BitSubspace struct.

1 | function broutine2x2!(st::AbstractVector{T}, U::AbstractMatrix, locs::Tuple{Int}) where T |

let’s compare this and subspace_mul2x2!, to be fair we will directly call broutine! and it will call subspace_mul! then dispatch to subspace_mul2x2!.

1 | julia> U = rand(ComplexF64, 2, 2); |

this is only a little bit faster. Hmm, this is not very ideal, but notice that because step_1 can

be very small and it is an inner loop, we can then unroll this loop as long as it is small, so we can

now manually write

1 | function broutine2x2!(st::AbstractVector{T}, U::AbstractMatrix, locs::Tuple{Int}) where T |

the last loop is also partially unrolled by slicing our iteration range.

1 | julia> broutine2x2!(r, $U, $locs) setup=(r=copy($st)) |

this is now much faster than subspace_mul2x2!, as you see, by slightly change the abstraction

we implement, we exposed a small loop that can be unrolled! So let’s delete our subspace_mul2x2!

and use this method instead:

1 | function broutine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}) where N |

now let’s think about how to unroll the small matrix for the controlled gate case: the term controlled gate simply means

when we see there is 1 (or 0 for inverse control) at the control location, we apply the matrix in subspace, or we don’t.

so we can just check the control location’s configuration inside the loop, to do this we can create two masks: a control

location mask ctrl_mask and a control flag mask flag_mask

1 | ctrl_mask = bmask(ctrl_locs) |

then we just need to check the bits on ctrl_locs to see if they are the same with flag_mask, we can implement a functionismatch to do this

1 | ismatch(index::T, mask::T, target::T) where {T<:Integer} = (index & mask) == target |

thus the implementation will look very similar to the un-controlled one, although it is evil to

copy-past, to be able to implement it within a day, I’ll just do so

1 | function broutine2x2!(st::AbstractVector, U::AbstractMatrix, locs::Tuple{Int}, ctrl_locs::NTuple{M, Int}, ctrl_configs::NTuple{M, Int}) where {N, M} |

let’s now compare the performance

1 | julia> U = rand(ComplexF64, 2, 2); |

Now the controlled single qubit gate routine is also improved a lot! Let’s dispatch to this too!

1 | function broutine!(st::AbstractVector, U::AbstractMatrix, locs::NTuple{N, Int}, ctrl_locs::NTuple{M, Int}, ctrl_configs::NTuple{M, Int}) where {N, M} |

Parallelize using Multi-threads

Now since we have implemented general matrix instructions, we should be able to simulate arbitrary quantum circuit. We can now parallel what we have implemented using multi-thread directly as we mentioned at the beginning. However, multi-threading is not always beneficial, it has a small overhead. Thus we may not want it when the number of qubits is not large enough.

We will implement a @_threads macro as following

1 | macro _threads(ex) |

Parallelize using CUDA

Now, we have implemented Pauli gates and a general matrix instructions. Let’s parallelize them using CUDA.jl. Since we are not using general purpose matrix multiplication anymore, we need to write our

own CUDA kernels, but this is actually not very hard in Julia, because we can reuse a lot code from our previous implementation.

But before we start doing this, let me explain what is a kernel function in the context of CUDA programming. As you might have known, GPU devices

are special chip designed for executing a lot similar tasks in parallel. These tasks can be described via a function. Executing the kernel function

on GPU is in equivalent to execute this function on CPU within a huge loop.

So as you have realized, this kernel function is exactly the same thing we unrolled in previous implementation. Thus we can quickly turn out previous CPU

implementation into GPU implementation by wrapping the kernel into a closure, which is very mechanical. Although, the best way to do this is to move the

overlapping part into a function, to demonstrate things more clearly in the blog post I just simply copy paste the previous implementation.

1 | function broutine!(st::CuVector{T}, U::AbstractMatrix, locs::Tuple{Int}) where T |

Benchmark

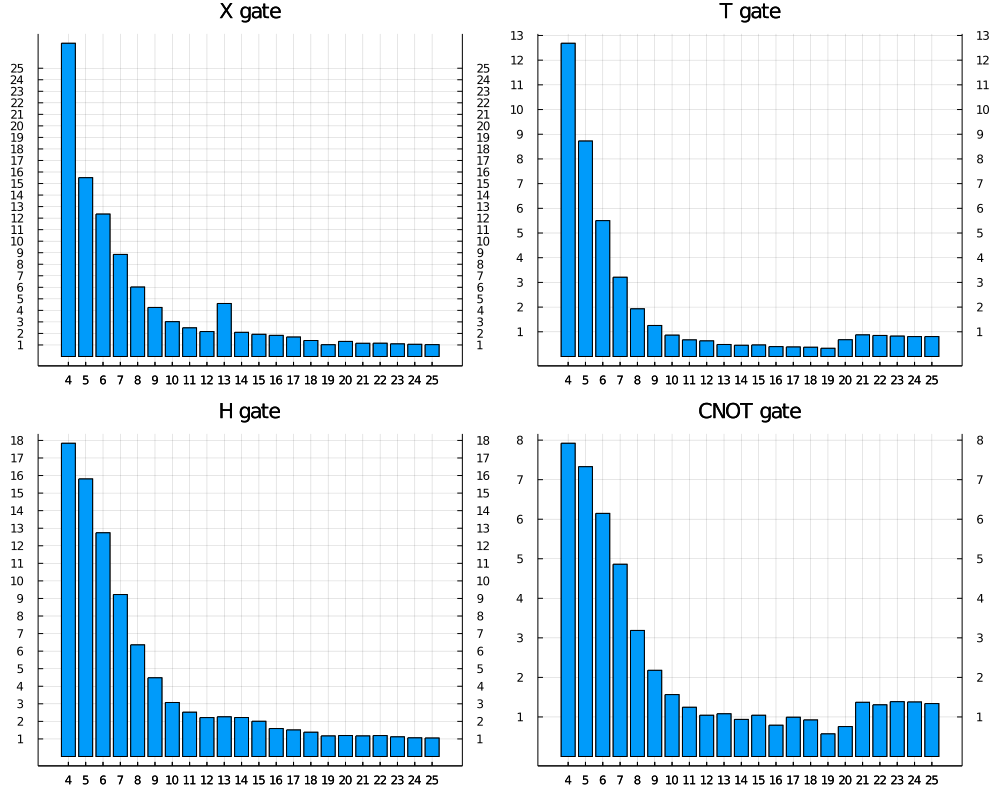

Now let’s see how fast is our ~600 line of code quantum circuit emulator. I don’t intend to go through a complete benchmark here

since the above implementation is generic it will has similar benchmark on different kinds of gates. And there are still plenty

of room to optimize, e.g we can specialize each routine for a known gate, such X gate, H gate to make use of their matrix structure.

The benchmark of multi-threaded routines and CUDA is currently missing since I don’t have access to a

GPU with ComplexF64 support to make the comparison fair. However, this blog post is a simple version of

YaoArrayRegister

in the Yao ecosystem, you can use the benchmark of Yao for reference. Or please also feel free to

benchmark the implementation and play with it in this blog post yourself for sure!

Let me compare this with one of the current best performance simulator qulacs, you should be able

to find relative benchmark comparing qulacs and other software here.

(I’m not comparing with Yao because the implementation is similar to what is implemented in Yao.)

first we clone the benchmark repo

1 | git clone https://github.com/Roger-luo/quantum-benchmarks.git |

then checkout to the stable release branch release-0.1

1 | cd quantum-benchmarks && git checkout release-0.1 |

this will prepare us the benchmark data on our machine. then we benchmark our own implementation

1 | using BenchmarkTools |

note: we always use minimum time as a stable estimator for benchmarks

now we plot the benchmark of X, H, T, CNOT in relative time, to see how good our own simulator is comparing to

one of the best Python/C++ based circuit simulator in single thread.

What’s more?

Recall our previous implementation, since we didn’t specify our matrix type or vector type

to be a Vector or other concrete type, and didn’t specify the element type has to be a ComplexF64 either,

this means ANY subtype of AbstractVector, and ANY subtype of Number can be used with the above methods.

Now we can do something interesting, e.g we can automatically get the ability of symbolic calculation by

feeding symbolic number type from SymEngine package or SymbolicUtils package.

Or we can use Dual number to perform forward mode differentiation directly. Or we can estimate error

by using the error numbers from Measurements.

Here is demo of using SymEngine:

1 | using SymEngine |

This is only possible when one is able to use generic programming to write

high performance program, which is usually not possible in the two-language solution Python/C++ without implementing one’s own

type system and domain specific language (DSL) compiler, which eventually becomes some efforts that reinventing the wheels.

Conclusion

Getting similar performance or beyond comparing to Python/C++ solution in numerical computation

is easily achievable in pure Julia with much less code. Although, we should wrap some of the overlapping

code into functions and call them as a better practice, we still only use less than 600 lines of code

with copy pasting everywhere.

Moreover, the power of generic programming will unleash our thinking of numerical methods on many different numerical types.

Experienced readers may find there may still rooms for further optimization, e.g we didn’t specialize much common gates yet, and the loop unroll size might not be the perfect size, and may still vary due to the machine.

Last, besides simulating quantum circuits, the above implementation of subspace matrix multiplication is actually a quite common routine happens frequently in tensor contraction (because quantum circuits are one kind of tensor network), thus more promising application can be using these routines for tensor contraction, however, to make these type of operations more efficient, it may require us to implement BLAS level 3 operation in the subspace which is the subspace matrix-matrix multiplication, which can require more tricks and more interesting.

I uploaded the implementation as a gist: https://gist.github.com/Roger-luo/0df73cabf4c91f9854657fdd2ed66767